Alias-Free Generative Adversarial Networks

Original paper

Alias-Free Generative Adversarial Networks. CoRR abs/2106.12423 (2021)

Abstract

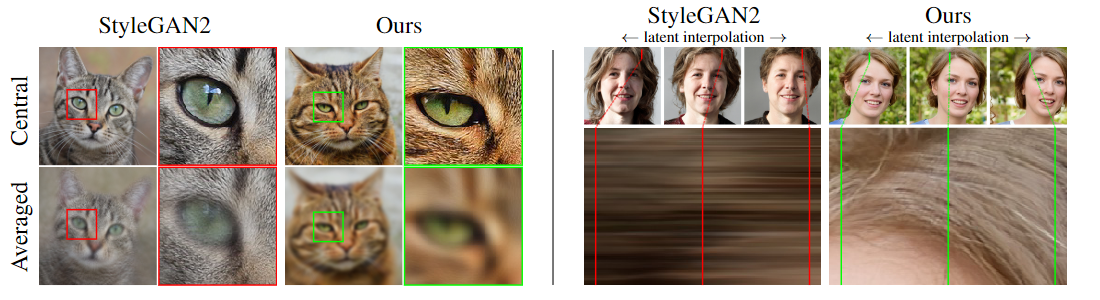

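We observe that, despite their hierarchical convolutional nature, the synthesis process of typical generative adversarial networks depends on absolute pixel coordinates in an unhealthy manner. This manifests itself as, for example, detail appearing to be glued to image coordinates instead of to the surfaces of the depicted objects. We trace the root cause to careless signal processing that causes aliasing in the generator network. Interpreting all signals in the network as continuous, we derive generally applicable, small architectural changes that guarantee that unwanted information cannot leak into the hierarchical synthesis process. The resulting networks match the FID of StyleGAN2 but differ dramatically in their internal representations, and they are fully equivariant to translation and rotation even at subpixel scales. Our results pave the way for generative models better suited to video and animation.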
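To make the term "aliasing" concrete, here is a minimal one-dimensional sketch. It is not taken from the paper; the helper `sample_cosine` and the chosen frequencies are illustrative assumptions. It shows that a cosine above the Nyquist limit produces exactly the same samples as a lower-frequency cosine, so the sampled signal can no longer tell the two apart.

```python
import math

def sample_cosine(freq_hz, sample_rate_hz, num_samples):
    """Sample cos(2*pi*f*t) at t = n / sample_rate for n = 0..num_samples-1."""
    return [
        math.cos(2.0 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]

# Illustrative values (assumptions): a 10 Hz sampling rate has a 5 Hz Nyquist limit.
SAMPLE_RATE_HZ = 10.0
high = sample_cosine(9.0, SAMPLE_RATE_HZ, 20)  # 9 Hz, above the Nyquist limit
low = sample_cosine(1.0, SAMPLE_RATE_HZ, 20)   # 1 Hz, below the Nyquist limit

# cos(2*pi*9*n/10) = cos(2*pi*n - 2*pi*n/10) = cos(2*pi*n/10),
# so the two sample sequences coincide up to floating-point rounding.
max_gap = max(abs(a - b) for a, b in zip(high, low))
aliased = max_gap < 1e-9
print("9 Hz is indistinguishable from 1 Hz after sampling:", aliased)
```

In a generator, the analogous effect is that high-frequency content created by operations such as upsampling or pointwise nonlinearities can fold back into lower frequencies unless it is suppressed first, which is the kind of leak the abstract attributes to careless signal processing.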

1 Introduction