Image2StyleGAN: How to Embed Images Into the StyleGAN Latent Space?

原文链接

Image2StyleGAN: How to Embed Images Into the StyleGAN Latent Space? ICCV 2019: 4431-4440

摘要

我们提出了一种将给定图像嵌入StyleGAN的潜在空间的有效算法。这种嵌入使语义图像编辑(semantic image editing)操作能够应用于存在的照片。以在FFHD数据集上训练的StyleGAN为例,我们展示了图像变形(image morphing),风格迁移和表情迁移的结果:通过研究嵌入算法的结果,可以深入了解StyleGAN的潜在空间的结构。我们提出了一组实验来测试什么类型的图像可以被嵌入,它们是如何被嵌入的,什么样的潜在空间适合嵌入,以及嵌入是否具有语义意义(semantically meaningful)。

1. Introduction

Generative Adverserial Networks (GANs) are very successfully applied in various computer vision applications, e.g. texture synthesis [18, 33, 28], video generation [31, 30], image-to-image translation [11, 36, 1, 24] and object detection [19]. In the few past years, the quality of images synthesized by GANs has increased rapidly. Compared to the seminal DCGAN framework [25] in 2015, the current state-of-the-art GANs [13, 3, 14, 36, 37] can synthesize at a much higher resolution and produce significantly more realistic images. Among them, StyleGAN [14] makes use of an intermediate W latent space that holds the promise of enabling some controlled image modifications. We believe that image modifications are a lot more exciting when it becomes possible to modify a given image rather than a randomly GAN generated one. This leads to the natural question if it is possible to embed a given photograph into the GAN latent space.

To tackle this question, we build an embedding algorithm that can map a given image $I$ in the latent space of StyleGAN pre-trained on the FFHQ dataset. One of our important insights is that the generalization ability of the pre-trained StyleGAN is significantly enhanced when using an extended latent space $W^+$ (See Sec. 3.3). As a consequence, somewhat surprisingly, our embedding algorithmis not only able to embed human face images, but also successfully embeds non-face images from different classes. Therefore, we continue our investigation by analyzing the quality of the embedding to see if the embedding is semantically meaningful. To this end, we propose to use three basic operations on vectors in the latent space: linear interpolation, crossover, and adding a vector and a scaled difference vector. These operations correspond to three semantic image processing applications: morphing, style transfer, and expression transfer. As a result, we gain more insight into the structure of the latent space and can solve the mystery

why even instances of non-face images such as cars can be embedded.

Our contributions include:

- An efficient embedding algorithm which can map a given image into the extended latent space $W^+$ of a pre-trained StyleGAN.

- We study multiple questions providing insight into the structure of the StyleGAN latent space, e.g.: What type of images can be embedded? What type of faces can be embedded? What latent space can be used for the embedding?

- We propose to use three basic operations on vectors to study the quality of the embedding. As a result, we can better understand the latent space and how different classes of images are embedded. As a byproduct, we obtain excellent results on multiple face image editing applications including morphing, style transfer, and expression transfer.

2. Related Work

High-quality GANs Starting from the groundbreaking work by Goodfellow et al. [8] in 2014, the entire computer vision community has witnessed the fast-paced improvements on GANs in the past years. For image generation tasks, DCGAN [25] is the first milestone that lays down the foundation of GAN architectures as fully-convolutional neural networks. Since then, various efforts have been madeto improve the performance of GANs from different aspects, e.g. the loss function [21, 2], the regularization or normalization [9, 23], and the architecture [9]. However, due to the limitation of computational power and the shortage of high-quality training data, these works are only tested with low resolution and poor quality datasets collected for classification / recognition tasks. Addressing this issue, Karras et al. collected the first high-quality human face dataset CelebA-HQ and proposed a progressive strategy to train GANs for high resolution image generation tasks [13]. Their ProGAN is the first GAN that can generate realistic human faces at a high resolution of 1024 × 1024. However, the generation of high-quality images from complex datasets (e.g. ImageNet) remains a challenge. To this end, Brock et al. proposed BigGAN and argued that the training of GANs benefit dramatically from large batch sizes [3]. Their BigGAN can generate realistic samples and smooth interpolations spanning different classes. Recently, Karras et al. collected a more diverse and higher quality human face dataset FFHQ and proposed a new generator architecture inspired by the idea of neural style transfer [10], which further improves the performance of GANs on human face generation tasks [14]. However, the lack of control over image modification ascribed to the interpretability

of neural networks, is still an open problem. In this paper, we tackle the interpretability problem by embedding user-specified images back to the GAN latent space, which leads to a variety of potential applications.

潜在空间嵌入(Latent Space Embedding) 一般来说,有两种现有方法可以将实例从图像空间嵌入到潜在空间:i) 学习一个将给定图像映射到潜在空间的编码器(例如,可变自动编码器(Variational Auto-Encoder)[15];ii) 选一个随机初始潜在编码,并使用梯度下降法对其进行优化[35, 4]。其中,第一种方法通过对编码器神经网络进行向前传递,提供了一个图像嵌入的快速解决方案。然而,在训练集之外,它存在着泛化性不足的问题。在本文中,我们决定将第二种方法作为更一般、更稳定的方案。

感知损失(Perceptual Loss)和风格迁移 传统上,两幅图像之间的低层次相似度是在像素空间中用$L1 / L2$损失函数来衡量的。而在过去几年中,受复杂图像分类[17, 20]成功的启发,Gatys等人[6, 7]观察到VGG图像分类模型[20]学习到的滤波器是优秀的通用特征提取器,并建议使用提取的特征的协方差统计来感知地测量图像之间的相似性,然后被归结为(formalized)感知损失[12, 5]。为了证明他们的方法的能力,在风格迁移[7)]中他们显示出了有前景的结果。具体来说,他们认为,VGG神经网络的不同层在不同尺度上提取图像特征,可分为内容(content)和风格(style)。为了加速初始算法,Johnson等人提出训练一个神经网络来解决[7]中的优化问题,它可以实时地将给定图像风格转换到任何其他图像上。他们的方法唯一的限制是,他们需要为不同风格的图像训练单独的神经网络。最后,Huang和Belongie[10]通过自适应实例规范化解决了这个问题。因此,他们可以实时迁移任意风格。

3. 哪些图像可以被嵌入StyleGAN的潜在空间

We set out to study the question if it is even possible to embed images into the StyleGAN latent space. This question is not trivial, because our initial embedding experiments with faces and with other GANs resulted in faces that were no longer recognizable as the same person. Due to the improved variability of the FFHQ dataset and the superior quality of the StyleGAN architecture, there is a renewed hope that embedding existing images in the latent space is possible.

3.1. 不同类型图像的嵌入结果

…

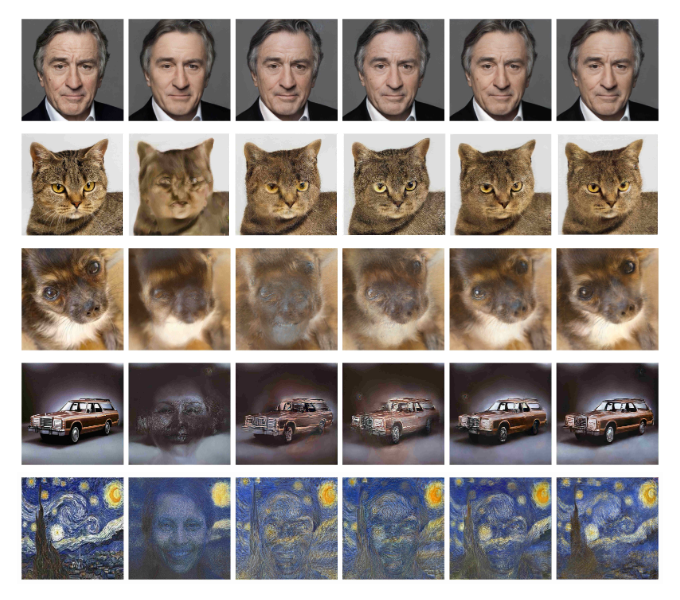

Going beyond faces, interestingly, we find that although the StyleGAN generator is trained on a human face dataset, the embedding algorithm is capable to go far beyond human faces. As Figure 1 shows, although slightly worse than those of human faces, we can obtain reasonable and relatively high-quality embeddings of cats, dogs and even paintings and cars. This reveals the effective embedding capability of the algorithm and the generality of the learned filters of the generator.

…

3.2. 人脸图像嵌入有多健壮?

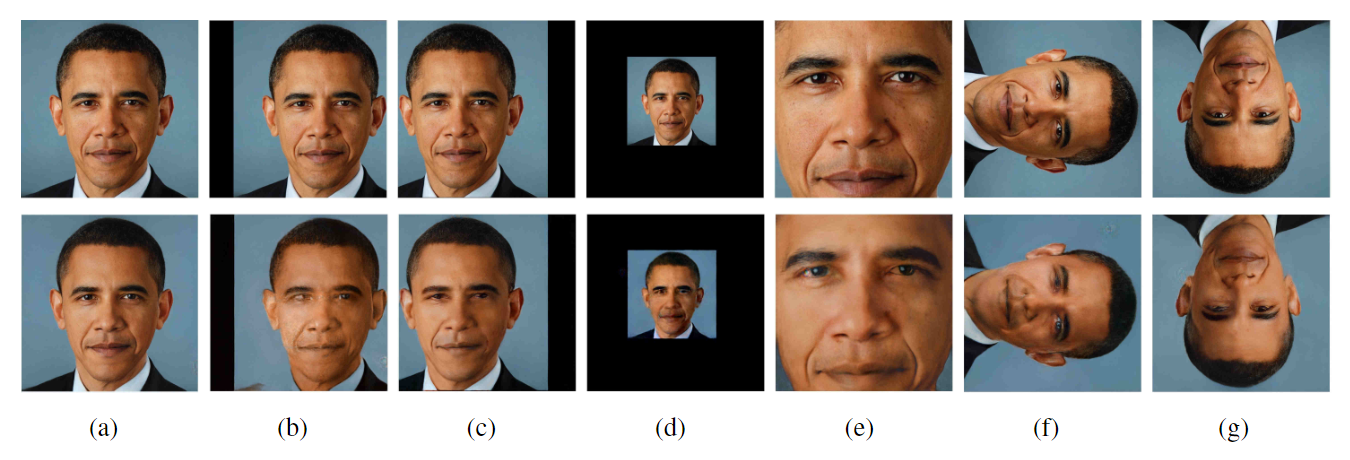

仿射变换 如Figure 2和Table 1所示,StyleGAN的嵌入的性能对仿射变换(平移,调整大小和旋转)非常敏感。Among them, the translation seems to have the worst performance as it can fail to produce a valid face embedding. For resizing and rotation, the results are valid faces. However, they are blurry and lose many details, which are still worse than the normal embedding. From these observations, we argue that the generalization ability of GANs is sensitive to affine transformation, which implies that the learned representations are still scale and position dependent to some extent.

![Table 1: Embedding results of the transformed images. L is the loss (Eq.1) after optimization. ‖ω∗ − ̄w‖ is the distance between the latent codes ω∗ and ̄w (Section 5.1) of the average face [14].](/images/StyleGAN-Encoder/Table 1.png)

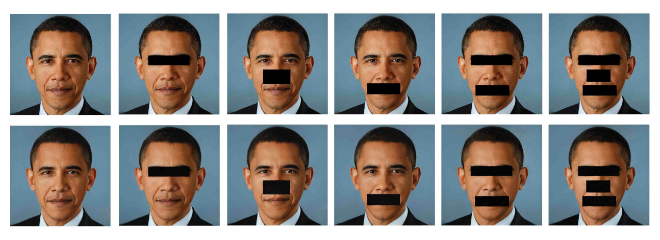

嵌入缺陷(Defective)图像 As Figure 3 shows, the performance of StyleGAN embedding is quite robust to defects in images. It can be observed that the embeddings of different facial features are independent of each other. For example, removing the nose does not have an obvious influence on the embedding of the eyes and the mouth. On the one hand, this phenomenon is good for general image editing applications. On the other hand, it shows that the latent space does not force the embedded image to be a complete face, i.e. it does not inpaint the missing information.

3.3. 选择哪个潜在空间?

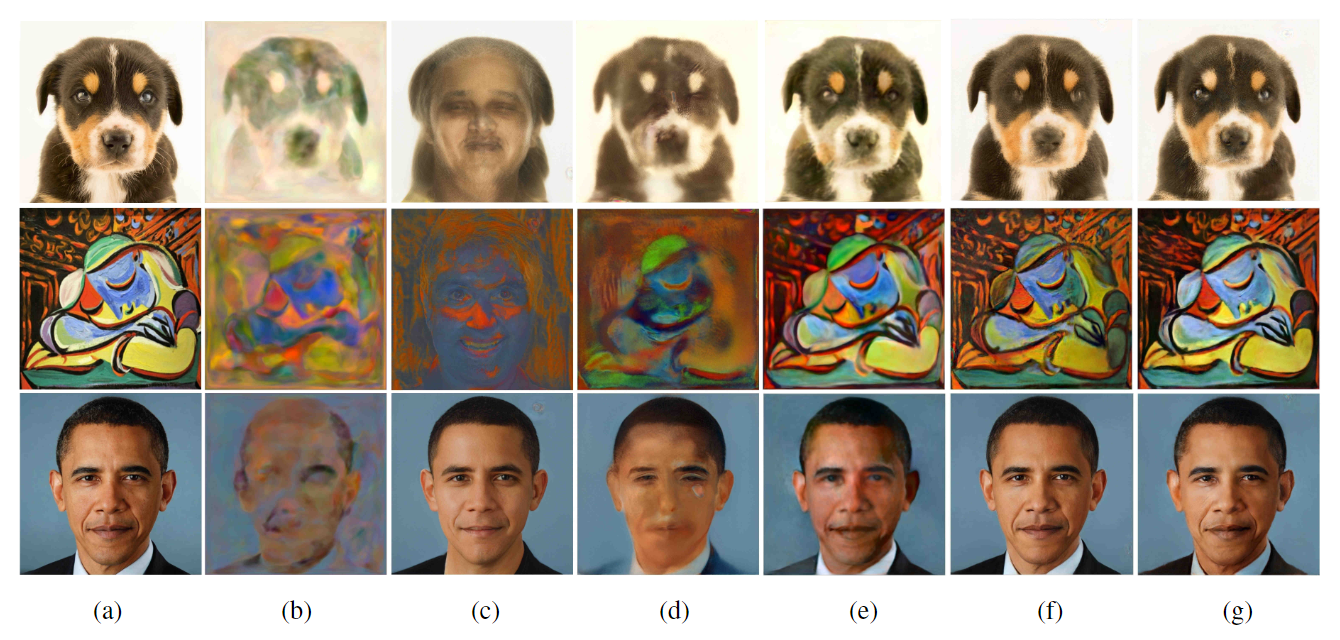

There are multiple latent spaces in StyleGAN [14] that could be used for an embedding. Two obvious candidates are the initial latent space $Z$ and the intermediate latent space $W$ . The 512-dimensional vectors $\omega \in W$ are obtained from the 512-dimensional vectors $z \in Z$ by passing them through a fully connected neural network. An important insight of our work is that it is not easily possible to embed into $W$ or $Z$ directly. Therefore, we propose to embed into an extended latent space $W^+$. $W^+$ is a concatenation of 18 different 512-dimensional $w$ vectors, one for each layer of the StyleGAN architecture that can receive input via AdaIn. As shown in Figure 5 (c)(d), embedding into $W$ directly does not give reasonable results. Another interesting question is how important the learned network weights are for the result. We answer this question in Figure 5 (b)(e) by showing an embedding into a network that is simply initialized with random weights.

4. 嵌入有多大意义?

5. 嵌入算法

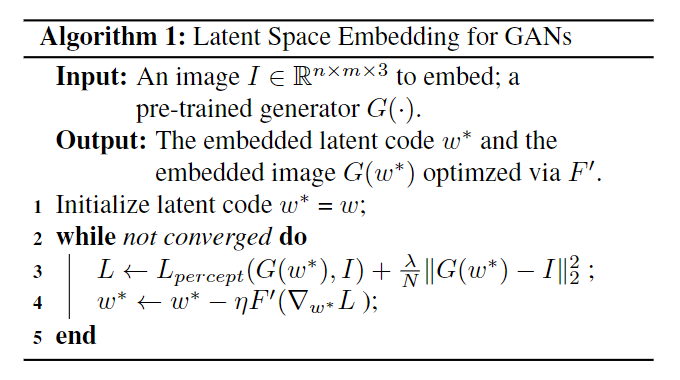

我们的方法遵循一个简单的优化框架 [4],将给定的图像嵌入预训练的生成器的流形(manifold)中。从一个合适的初始值$\omega$开始,我们搜索一个优化向量$\omega^\ast$可以使衡量给定图像和由$\omega^\ast$生成的图像之间的损失函数最小化。Algorithm 1展示了我们方法的伪代码。这项工作的一个有趣的方面是,并不是所有的设计选择都能带来好的结果,而且对设计选择的实验可以进一步地了解嵌入。

5.1. 初始化

我们研究了初始化的两种设计选择。第一种选择是随机初始化。在这种情况下,每个变量独立地采样于均匀分布$\mathcal{U}[-1, 1]$。第二种选择的动机是,观察到距平均潜在向量$\overline\omega$的距离可以用来区分低质量的人脸 [14]。因此,我们提出用$\overline\omega$作为初始化,并期望优化收敛得到更接近$\overline\omega$的$\omega^\ast$。

为了评估这两种设计选择,我们比较了优化后的潜在编码$\omega^\ast$和$\overline\omega$之间的损失值和距离$||\omega ^* - \overline\omega||$。如Table 2所示,在人脸图像嵌入中将$\omega$初始化为$\overline\omega$不仅使优化后的$\omega^\ast$更接近$\overline\omega$,而且实现了更小的损失值。然而,对于其他类别的图像(例如狗),随机初始化被证明是更好的选择。直觉上,这一现象表明了该分布只有人脸的聚簇(cluster),其他的实例(例如狗,猫)是围绕着聚簇没有明显模式(pattern)的散点。定性结果如Figure 5 (f)(g)所示。

![Table 2: Algorithmic choice justification on the latent code initialization. ω Init. is the initialization method for the latent code ω. L is the mean of the loss (Eq.1) after optimization. ‖ω∗ − ̄w‖ is the distance between the latent codes ω∗ and ̄w of the average face [14].](/images/StyleGAN-Encoder/Table 2.png)

5.2. 损失函数

为了在优化过程中测量输入图像和嵌入图像的相似性,我们采用了一个损失函数,它是VGG-16感知损失[12]和像素级MSE损失的加权组合:

其中$I \in \mathbb{R}^{n \times n \times 3}$是输入图像,$G(\cdot)$是预训练的生成器,$N$是图像中的标量(scalar)数(即$N = n \times n \times 3$),$\omega$是要优化的潜在编码,$\lambda_{mse} = 1$是根据经验获得的,以获得良好的性能。对于Eq.1中的感知损失项$L_{percept}(\cdot)$,我们使用:

其中$I_1, I_2 \in \mathbb{R}^{n \times n \times 3}$是输入图像,$F_j$分别是是VGG-16的层$conv1_1$,$conv1_2$,$conv3_1$和$conv4_2$的输出,$N_j$是$jth$层输出的标量数,对于所有的$js$,都取经验值$\lambda_j = 1$以获得良好的性能。

我们对感知损失和像素级MSE损失的选择来自这样一个事实,即仅凭像素级MSE无法找到一个高质量的嵌入。因此,感知损失充当了某种正则化工具,将优化引导到潜在空间的正确区域。

我们进行了消融研究(ablation study),以证明我们在Eq.1 中损失函数选择的合理性。如Figure 9所示,仅使用像素级的MSE损失项(第2列)可以很好地嵌入整体颜色,但无法捕捉到一些非人脸图像的特征。此外,它还具有平滑效果,即使是人脸也无法保留细节。有趣的是,由于像素空间中有像素级MSE损失在起作用,并且忽略特征空间中的差异,其在非人脸图像(例如汽车和绘画)上的嵌入结果趋向于预训练的StyleGAN[14]的平均脸。这个问题被感知损失(第3,5列)解决,感知损失在特征空间中衡量图像的相似性。由于我们的嵌入人物要求嵌入图像在所有尺度上都要接近输入,我们发现在VGG-16网络中的多个层匹配特征(第5列)比只使用单个层(第3列)效果更好。这进一步促使我们将像素级MSE损失和感知损失(第4,6列)结合起来,因为像素级MSE损失可以被视为是最低层次的在像素尺度的感知损失。Figure 9的第6列展示了我们最终选择的结果(像素级MSE损失 + 多层感知损失),它在不同算法选择中实现了最佳性能。